verifying probabilistic code from ChatGPT

This an artificial intelligence alignment experiment following the first step of Davidad architecture. I will use two components: ChatGPT as an unsafe artificial intelligence component, and LazyPPL as the formal verifiable output.

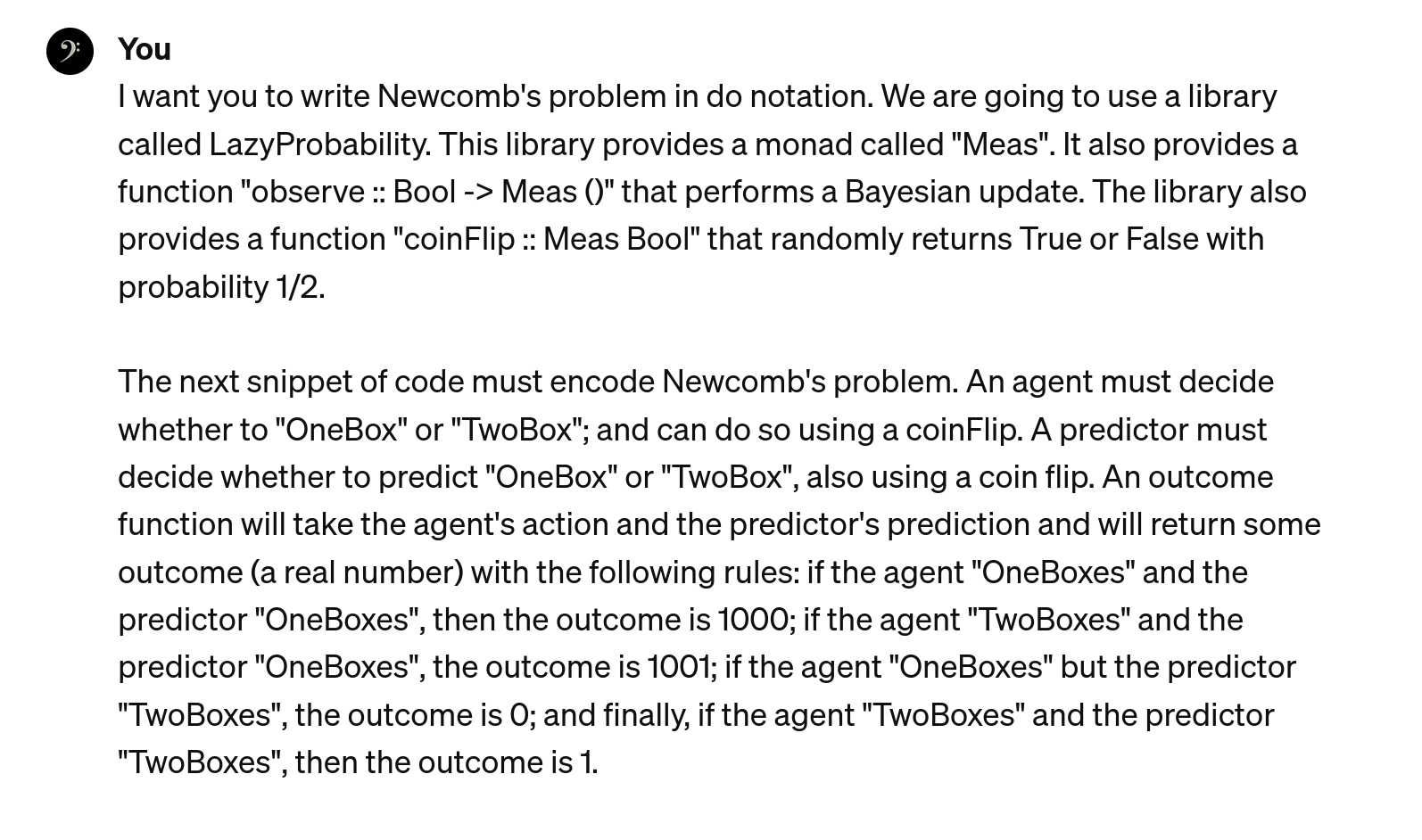

The experiment starts by asking ChatGPT to write code to compute and solve Newcomb;s problem. We need to explain the library we will use: LazyPPL (or at least some primitives of it, like “observe”); in practice, I explain the primitives for a partial Markov category.

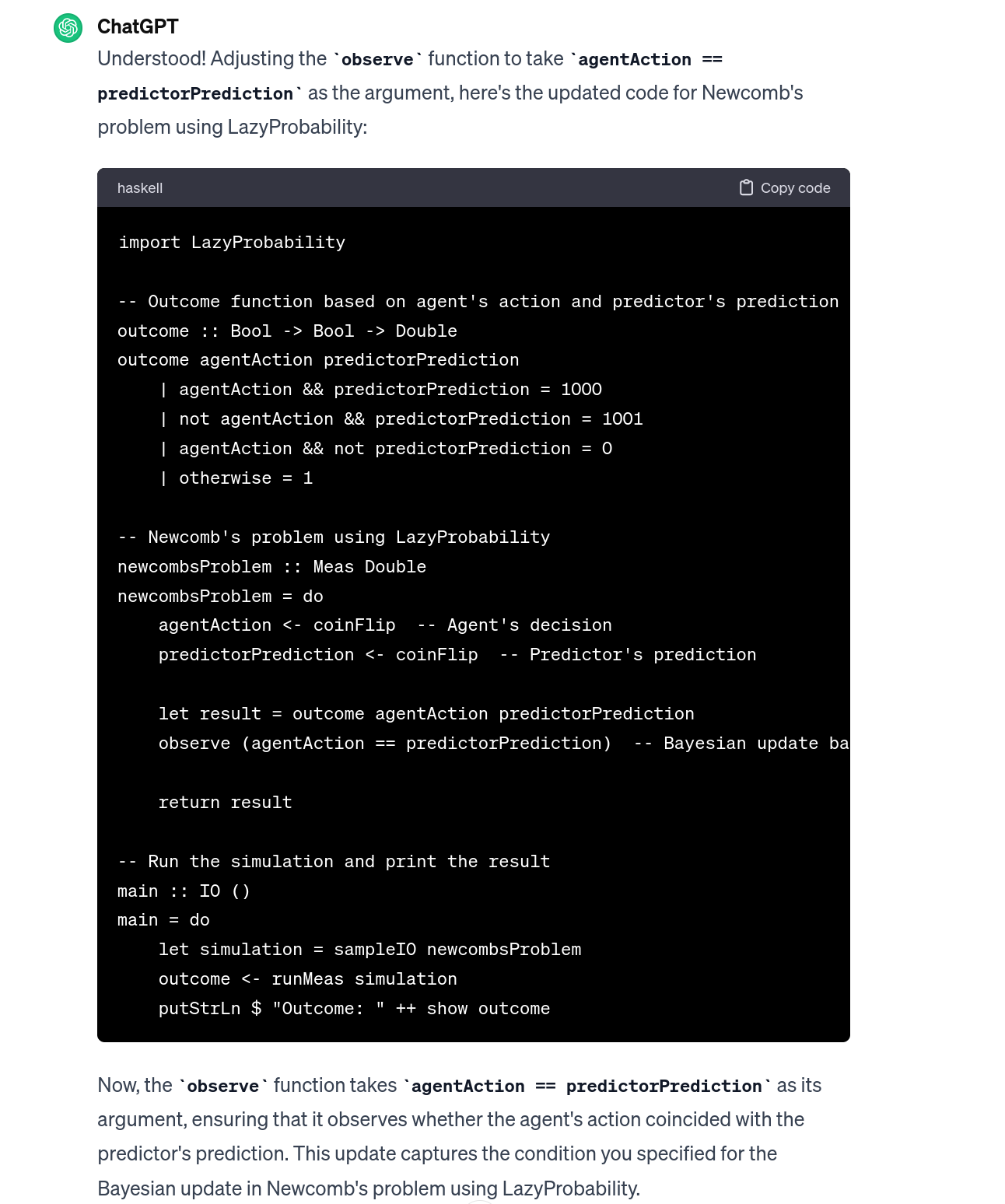

ChatGPT will answer producing code that is compatible with the library. Strictly speaking, I needed some discussion with ChatGPT to get the correct piece of code; I expect this not to be a problem for a more experienced prompt engineer.

Finally, we could copy the code back to Haskell. This code can be analyzed formally: it is only using some monadic actions whose semantics we know well. The model of AI safety would be to ask the unsafe components to produce monadic code on some well-known fomally verifiable monad.

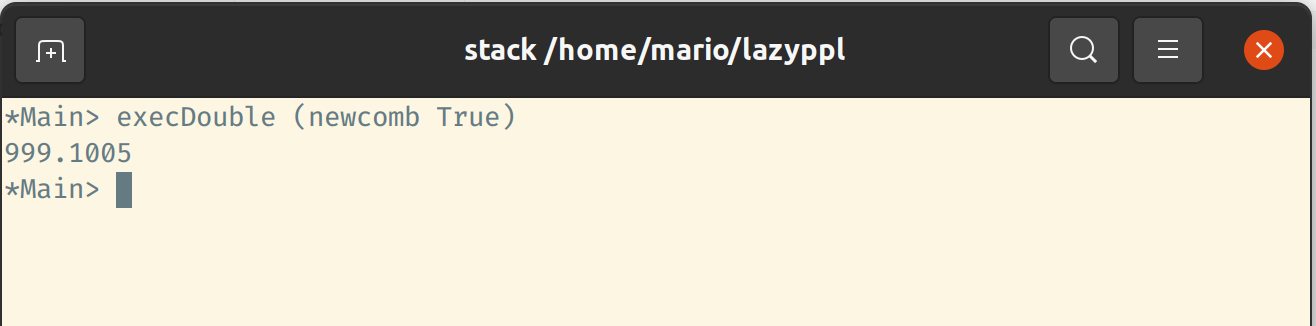

In this case, I can use LazyPPL to verify that the solution of Newcombs problem does what we want: estimating with Metropolis-Hasting algorithm, we get an utility of 999.1005, which is close to the 1000 maximum utility. The obvious extension would be to have a probabilistic library that provides exact bounds on the reals.

Further work would include repeating this in some grid world, using a restricted version of a monad.